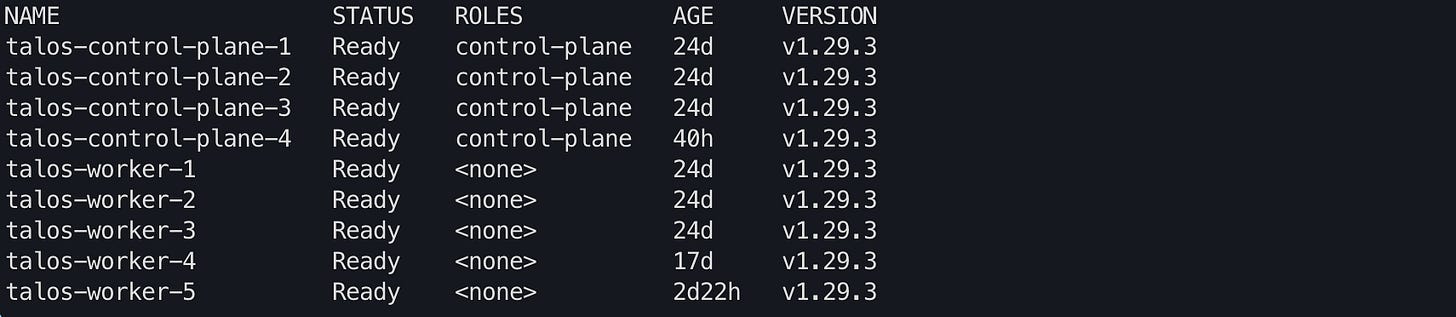

I’ve made a CI/CD-based blog before. At that time I was using an unstable 2-node LA (lowly available) “cluster”. Things are a lot different now:

This time there is also a constant need to deploy onto k8s for the many “projects” I am maintaining simultaneously.

The Motive

Ever pushed an image to Docker Hub? Ever seen it get 1000 pulls in one day? What if I don’t want the world’s bots pulling down my container images? Easy solution: a self-hosted container registry.

That brings up another problem: I need to be able to push images to my container registry from CI builds. My registry is not internet-exposed, so GitHub’s hosted runners can’t reach it. Easy solution: self-hosted Git and runners.

I am maintaining a few projects at once and want a container built, pushed, and deployed onto k8s inside my homelab.

Tools

I came across several tools while scoping out what this would need, and ended up deciding on:

Harbor, an open-source registry that secures artifacts with policies and role-based access control, ensures images are scanned and free from vulnerabilities, and signs images as trusted. It is also a CNCF graduated project.

Gitea, like any other Git, with CI/CD. The goal of this project is to provide the easiest, fastest, and most painless way of setting up a self-hosted Git service.

Other tech used (as always):

Docker.

Kubernetes.

ArgoCD (looking into Flux soon).

Overengineered? Maybe, but did you know homelabbing translates to overengineering?

Harbor Setup

To deploy Harbor you are going to need persistent storage and an ingress controller. Harbor’s Helm chart is made to be deployed behind NGINX, but I use Traefik, so the ingress has to be created manually. Besides that, the deployment is simple.

helm repo add harbor https://helm.goharbor.io

helm fetch harbor/harbor --untar

Change the configuration of the following values in your values.yaml file.

Ingress rule: Configure expose.ingress.hosts.core.

External URL: Configure externalURL.

Storage: By default, a default StorageClass is needed in the Kubernetes cluster to provision volumes to store images, charts, and job logs.

If you want to specify your own StorageClass, set persistence.persistentVolumeClaim.registry.storageClass, persistence.persistentVolumeClaim.chartmuseum.storageClass and persistence.persistentVolumeClaim.jobservice.storageClass. Otherwise, the default StorageClass will be used.

In my case, I am using Mayastor.
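As a rough sketch, the settings described above could live in a small override file instead of being edited into the full values.yaml. The file name harbor-overrides.yaml and the StorageClass name mayastor-1 are placeholders I made up for illustration; the hostname is the example one used throughout this post. The persistence keys can differ between chart versions, so check them against the values.yaml you fetched.

```shell
#!/bin/sh
# Write a minimal override file for the Harbor chart.
# Assumptions: the file name and the StorageClass name are placeholders.
STORAGE_CLASS="${STORAGE_CLASS:-mayastor-1}"

cat > harbor-overrides.yaml <<EOF
expose:
  ingress:
    hosts:
      core: registry.home.example.com
externalURL: https://registry.home.example.com
persistence:
  persistentVolumeClaim:
    registry:
      storageClass: "${STORAGE_CLASS}"
EOF

echo "wrote harbor-overrides.yaml"
```

A file like this could then be passed to Helm with --values=harbor-overrides.yaml, leaving every other chart default untouched.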

helm install harbor-traefik harbor/harbor --values=values.yaml

If you’re using Traefik, first create a certificate in the harbor namespace:

apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: hardbor-cert
  namespace: harbor
spec:
  secretName: registry-tls
  issuerRef:
    name: letsencrypt-production
    kind: ClusterIssuer
  commonName: "registry.home.example.com"
  dnsNames:
    - "registry.home.example.com"

kubectl apply -f certificate.yml

Next, create an IngressRoute for Traefik:

apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: harbor-route
  namespace: harbor
spec:
  entryPoints:
    - websecure
  routes:
    - match: Host(`registry.home.example.com`)
      kind: Rule
      services:
        - name: harbor-portal
          port: 80
    - match: Host(`registry.home.example.com`) && PathPrefix(`/api/`, `/c/`, `/chartrepo/`, `/service/`, `/v2/`)
      kind: Rule
      services:
        - name: harbor-core
          port: 80
    - match: Host(`notary.home.example.com`)
      kind: Rule
      services:
        - name: harbor-notary-server
          port: 4443
  tls:
    secretName: registry-tls

Make sure a DNS record is set on your local DNS server or in your hosts file.

Accessing the container registry via the Docker CLI is simple:

docker login https://registry.home.example.com

Images will have to be tagged with a specific naming convention for them to be pushed into the registry.

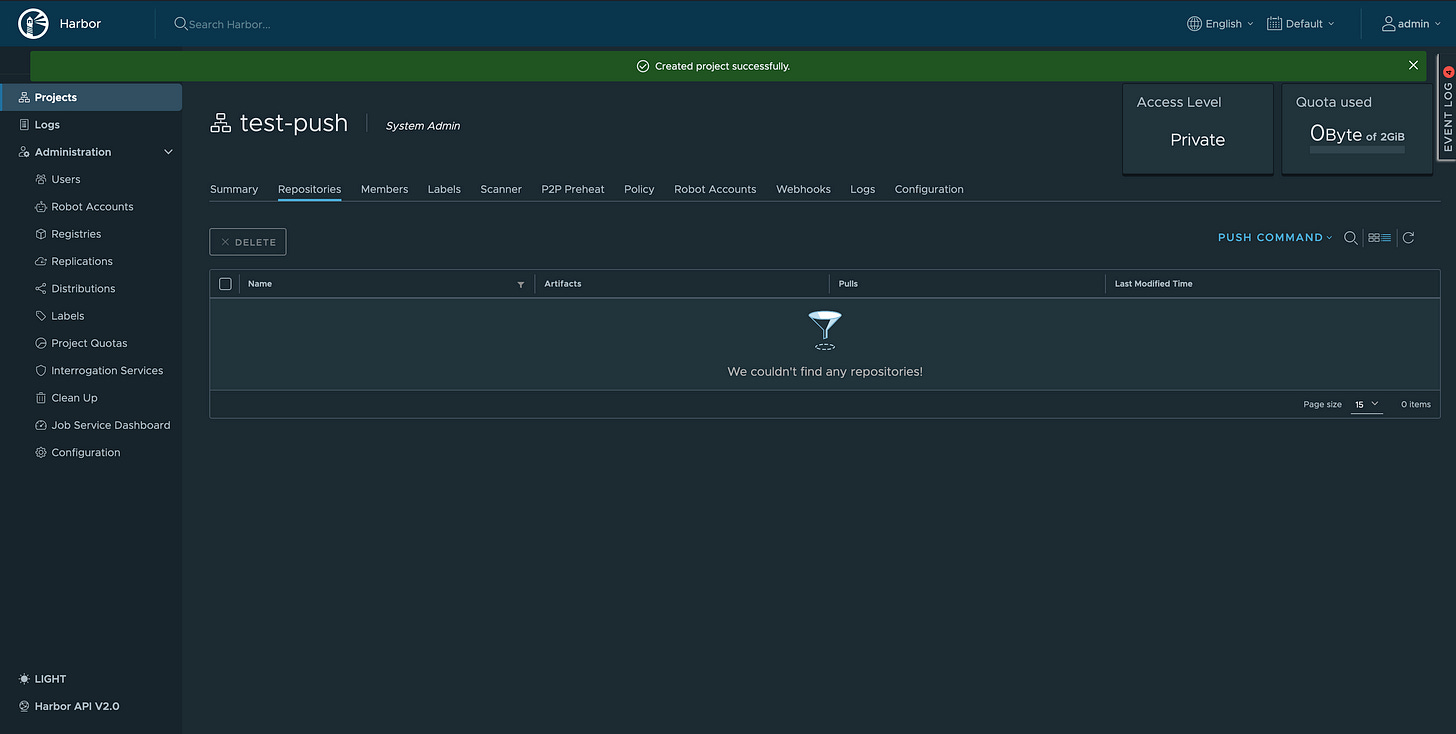

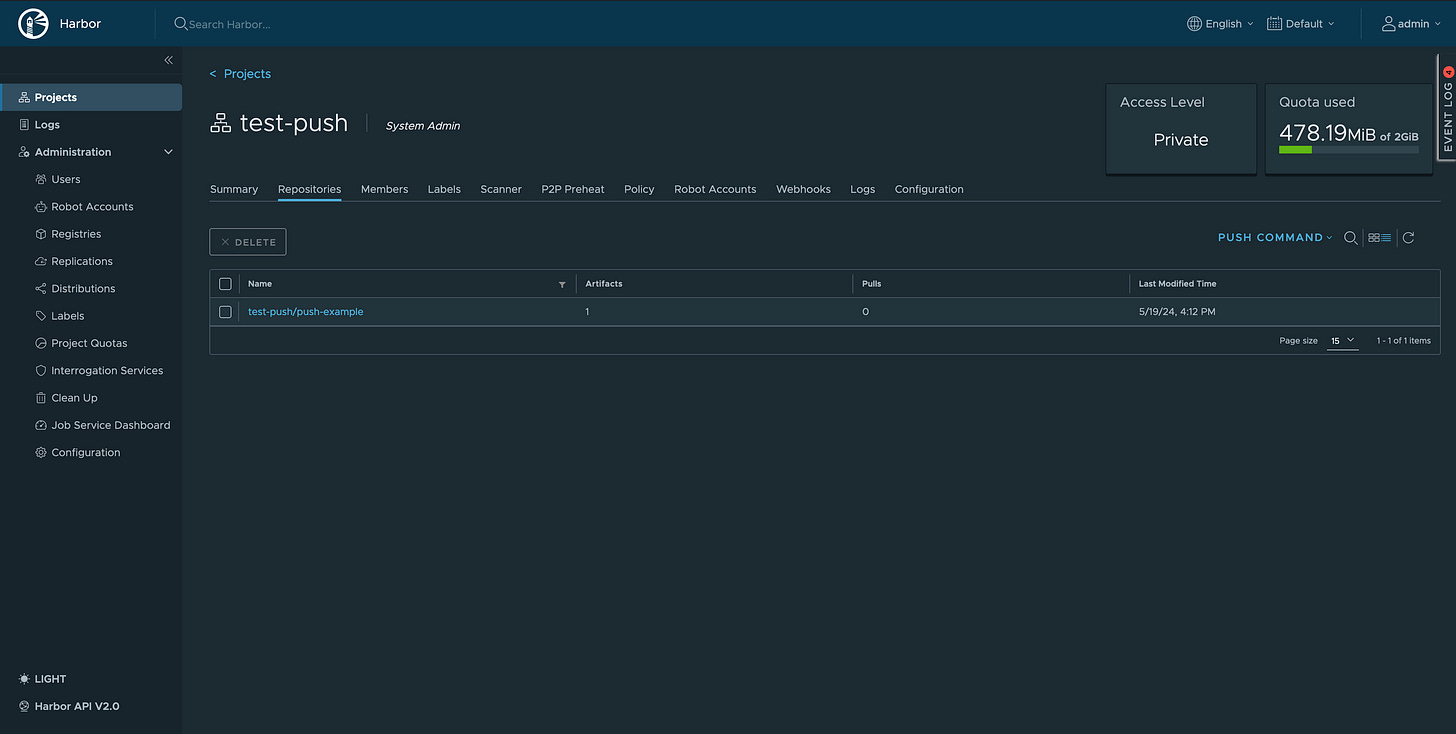
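The convention is the registry host, then a Harbor project, then the repository name and tag. A minimal sketch of assembling such a reference in a script follows; the helper name image_ref is hypothetical and not part of Docker or Harbor, and the values are the example ones from this post.

```shell
#!/bin/sh
# Build a Harbor image reference: <registry>/<project>/<repository>:<tag>.
# image_ref is a made-up helper for illustration; the tag defaults to "latest".
image_ref() {
  registry="$1"
  project="$2"
  repository="$3"
  tag="${4:-latest}"
  printf '%s/%s/%s:%s\n' "$registry" "$project" "$repository" "$tag"
}

# Prints registry.home.example.com/test-push/push-example:latest
image_ref registry.home.example.com test-push push-example latest
```

The printed string is what would be handed to docker tag and docker push as the target name.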

First, a project needs to be created inside Harbor, for example test-push.

Then you can tag and push one of your local images:

docker tag backup_everything:0.0.1 registry.home.example.com/test-push/push-example:latest

docker push registry.home.example.com/test-push/push-example:latest

Gitea

If you run TrueNAS SCALE, the Gitea installation is pretty simple, as it’s a native chart with TrueCharts. I won’t walk through the installation because that was my experience: just set the admin name, password, and ingress. If you do not have TrueNAS SCALE, please refer to the Gitea documentation for installation instructions.

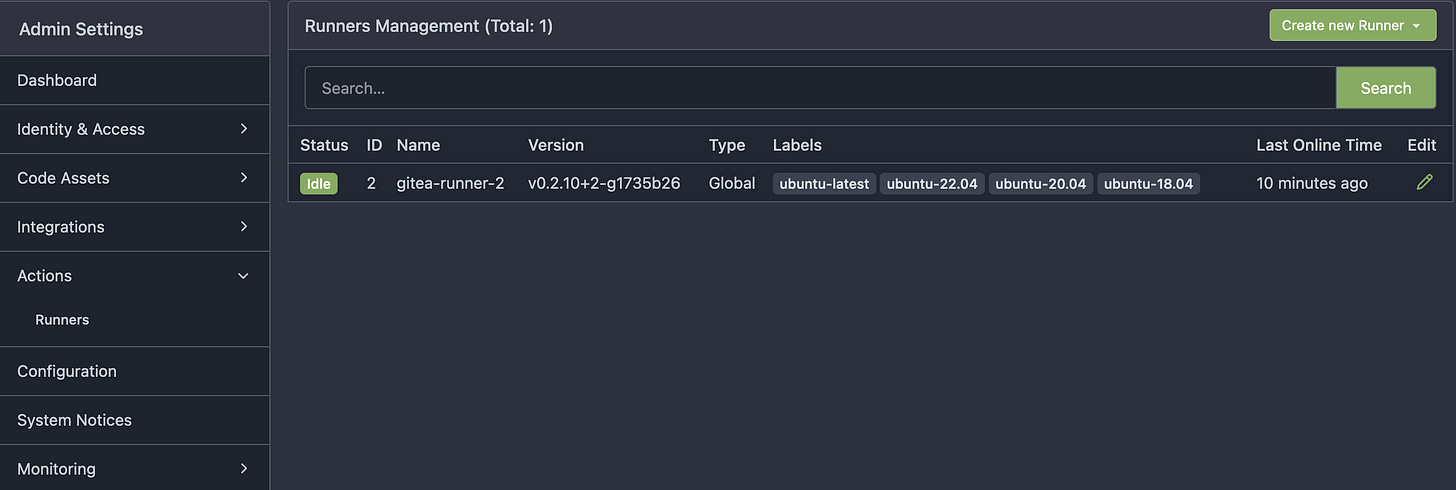

Once you have Gitea installed, the first thing I’d do is create a runner for your pipelines.

What is a runner?

In CI/CD pipelines, a runner is an agent or server that executes the jobs defined in the pipeline's configuration file. It can run scripts, build code, run tests, and deploy applications. Runners can be hosted by the CI/CD service or self-managed by the user.

Setup

The runner will be a Docker container (running on another host). When running Docker build and push actions, it will be running Docker in Docker, which I mention only because it is kinda cool.

Gitea home → Site Administration → Actions → Runners → Create new Runner → Copy your registration token.

mkdir gitea-runner-1

cd gitea-runner-1

vim docker-compose.yml

version: "3.8"
services:
  runner:
    image: gitea/act_runner:nightly
    environment:
      GITEA_INSTANCE_URL: "https://git.home.example.com"
      GITEA_RUNNER_REGISTRATION_TOKEN: "INSERT_REGISTRATION_TOKEN"
      GITEA_RUNNER_NAME: "gitea-runner-1"
      GITEA_RUNNER_LABELS: "ubuntu-latest:docker://node:16-bullseye,ubuntu-22.04:docker://node:16-bullseye,ubuntu-20.04:docker://node:16-bullseye,ubuntu-18.04:docker://node:16-buster"
    volumes:
      - ./config.yaml:/config.yaml
      - ./data:/data
      - /var/run/docker.sock:/var/run/docker.sock

docker compose up -d

CI/CD

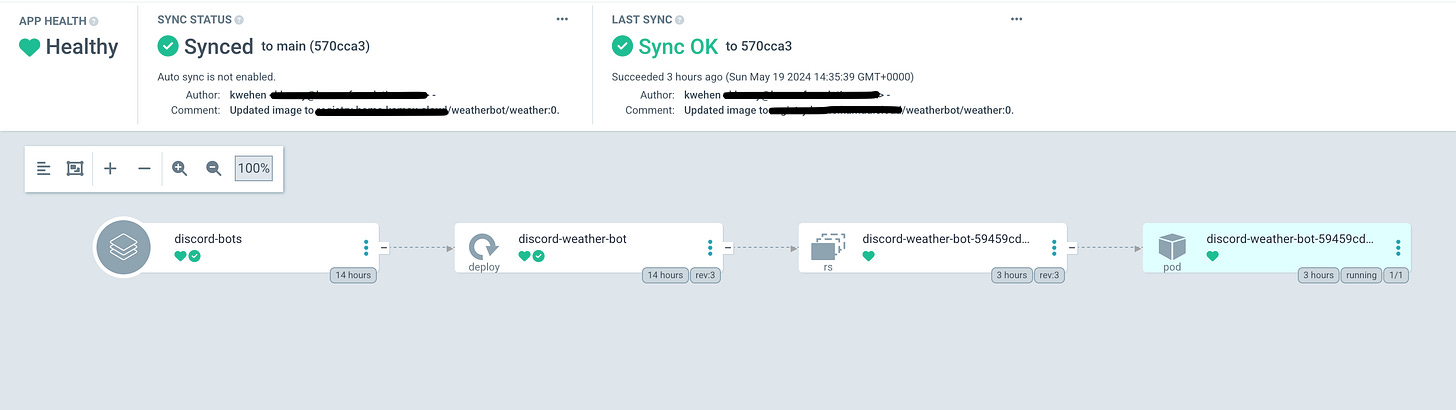

As stated, I’m using ArgoCD to do continuous deployment onto my k8s cluster. I have been forcing myself to learn Go recently, which I am loving. I have been messing around with writing a few Discord bots for my personal Homelab Operations server, one of which checks the weather. I will go into my Go journey in another post, but here I will explain deploying this and the other bots on my cluster.

In ArgoCD, it is best practice to have one repo with your code and another repo with your manifest or values file, which is updated through CI. ArgoCD periodically checks the manifest repository for changes and syncs the cluster accordingly.

One of my biggest challenges has been coming up with a repeatable way to update the manifest repo through my CI actions, especially since uploading and downloading artifacts is not supported in Gitea. So that was the first challenge.

My solution? Writing the tags from the Docker metadata step to a file, exposing the first one as a job output, and reading it in another job via ${{ needs.<job>.outputs.* }} and yq:
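To make the two-repo layout concrete, here is a hedged sketch of an ArgoCD Application that tracks a manifest repository. The app name, destination namespace, and file name are placeholders I chose; the repo URL simply combines the example Gitea host with the manifest repository name and manifest directory that appear in the workflow later in this post, so adjust all of them to your setup.

```shell
#!/bin/sh
# Write an Argo CD Application that points at the manifest repository.
# Assumptions: app name, namespaces, and file name are placeholders.
cat > weather-app.yaml <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: weatherbot
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://git.home.example.com/kwehen/weather-argocd.git
    targetRevision: main
    path: manifest
  destination:
    server: https://kubernetes.default.svc
    namespace: weatherbot
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
EOF

echo "wrote weather-app.yaml"
```

Applying it with kubectl apply -f weather-app.yaml registers the app. With syncPolicy.automated set, ArgoCD applies changes it finds in the repo on its own; without it, the app only shows as out of sync until someone triggers a sync.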

- name: Create Tag Artifact
  run: echo "${{ steps.meta.outputs.tags }}" > tags.txt
- name: Create Tag Output
  id: tag
  run: echo "::set-output name=tag::$(head -n 1 ./tags.txt)"

outputs:
  tag: ${{ steps.tag.outputs.tag }}

yq -i e ".spec.template.spec.containers[0].image = \"${{ needs.docker.outputs.tag }}\"" manifest.yml

The full workflow:

name: ci

on:
  push:
    tags:
      - '*'

jobs:
  docker:
    runs-on: ubuntu-latest
    container:
      image: catthehacker/ubuntu:act-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v3
      - name: Docker meta
        id: meta
        uses: docker/metadata-action@v5
        with:
          images: |
            registry.home.example.com/weatherbot/weather
          tags: |
            type=semver,pattern={{version}}
      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3
      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3
      - name: Login to DockerHub
        uses: docker/login-action@v3
        with:
          registry: registry.home.example.com
          username: ${{ secrets.REGISTRY_USERNAME }}
          password: ${{ secrets.REGISTRY_PASSWORD }}
      - name: Build and push
        uses: docker/build-push-action@v5
        with:
          context: .
          platforms: linux/amd64
          push: true
          tags: ${{ steps.meta.outputs.tags }}
      - name: Create Tag Artifact
        run: echo "${{ steps.meta.outputs.tags }}" > tags.txt
      - name: Create Tag Output
        id: tag
        run: echo "::set-output name=tag::$(head -n 1 ./tags.txt)"
    outputs:
      tag: ${{ steps.tag.outputs.tag }}

  updategit:
    needs: docker
    runs-on: ubuntu-latest
    steps:
      - name: Update Manifest Repo
        uses: actions/checkout@v3
        with:
          repository: 'kwehen/weather-argocd'
          token: ${{ secrets.GIT_TOKEN }}
          ref: main
      - name: Install yq
        run: |
          wget https://github.com/mikefarah/yq/releases/latest/download/yq_linux_amd64 -O /usr/bin/yq
          chmod +x /usr/bin/yq
      - name: Modify Images
        run: |
          git config --global user.name "kwehen"
          git config --global user.email "example@example.com"
          cd manifest
          yq -i e ".spec.template.spec.containers[0].image = \"${{ needs.docker.outputs.tag }}\"" manifest.yml
          git add .
          git commit -m "Updated image to ${{ needs.docker.outputs.tag }} by Gitea Actions"
          git push
        env:
          GIT_USERNAME: ${{ secrets.GIT_USERNAME }}
          GIT_PASSWORD: ${{ secrets.GIT_TOKEN }}

The last step is to create an app inside ArgoCD, which can be done via the CLI or the GUI. See this YouTube video:

Once synced, I now have fully self-hosted CI/CD: Git and continuous integration pipelines with Gitea Actions, a container registry for my images with Harbor, and ArgoCD to deploy onto my Kubernetes cluster.

Blog Repository

The repository with all of the code used in this blog post can be found on my GitHub.

Nice!!